Caching vs Content Delivery Networks – What's the Difference?

In the world of network optimization, Content Delivery Networks (CDNs) and caching play a vital role in improving website performance and user experience. And while both aim to speed up website loading times, they have distinct purposes and mechanisms. In this tutorial, we'll dive deep into the details of CDNs and caching to understand their similarities, differences, and how they contribute to enhancing online experiences.

What is Caching?

Imagine you’re a librarian managing a popular library. Every day, readers come in asking for the same set of books like “Think and Grow Rich” or “The Intelligent Investor.”

Initially, you fetch these books from the main shelves, which takes time and effort. But soon, you notice a pattern: the same set of books are requested repeatedly by different readers. So, what do you do?

You decide to create a special section near the entrance where you keep copies of these frequently requested books. Now, when readers come asking for them, you don’t have to run to the main shelves each time. Instead, you simply hand them the copies from the special section, saving time and making the process more efficient.

This special section represents the cache, storing frequently accessed books for quick retrieval.

Caching is a technique used to store copies of frequently accessed data temporarily. The cached data can be anything from web pages and images to database query results. When a user requests cached content, the server retrieves it from the cache instead of generating it anew, significantly reducing response times.

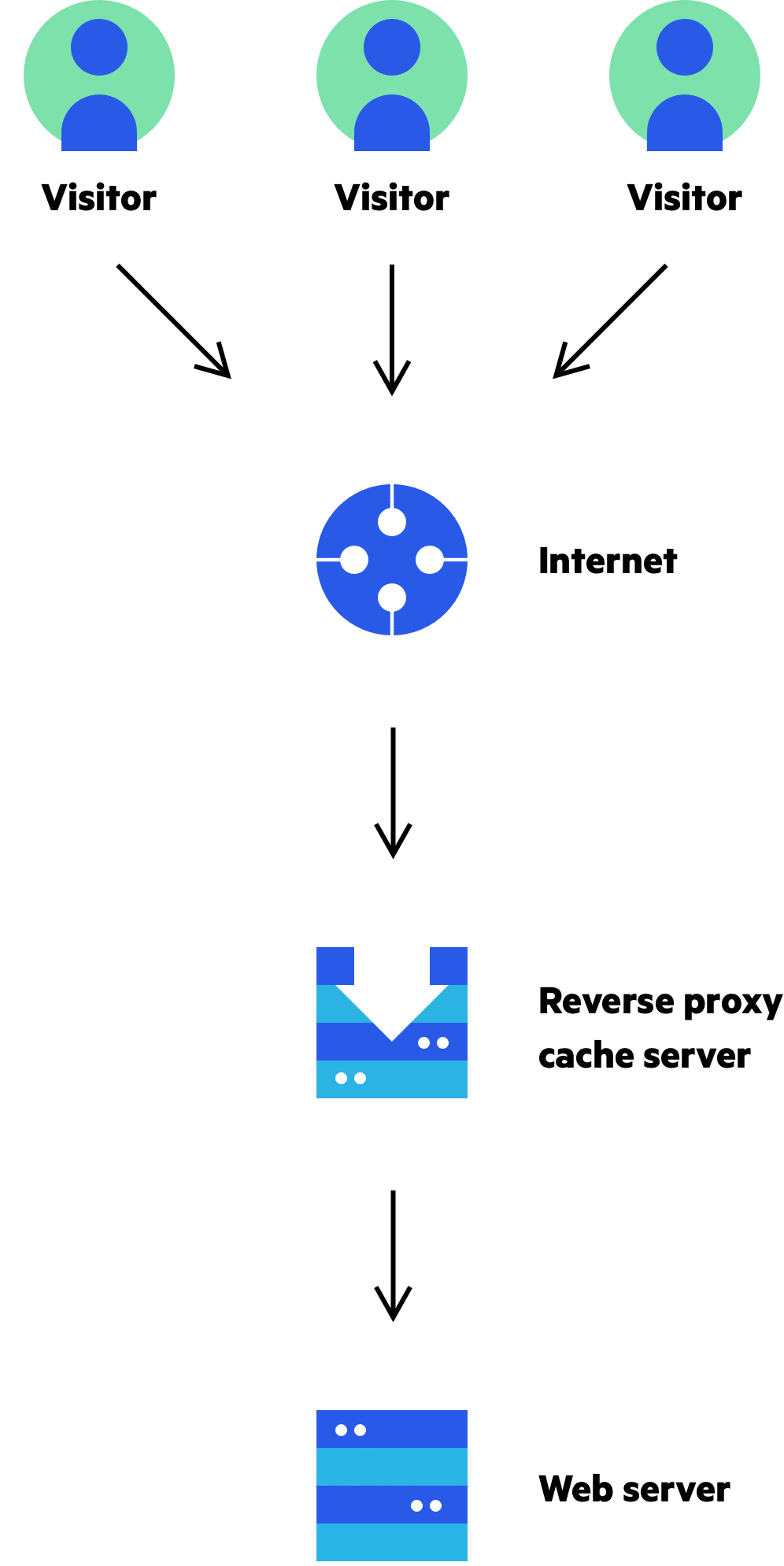

When a web server receives a request, it can follow different caching strategies to handle it efficiently. One prevalent strategy is known as read-through caching:

- Request Received: The web server gets a request from a client.

- Check Cache: It first looks into the cache to see if the response to the request is already there.

- Cache Hit: If the response is in the cache (hit), it sends the data back to the client right away.

- Cache Miss: If the response isn’t in the cache (miss), the server queries the database to fetch the required data.

- Store in Cache: Once it gets the data from the database, it stores the response in the cache for future requests.

- Send Response: Finally, the server sends the data back to the client.

What to Consider When Implementing a Cache System

Decide When to Use a Cache:

- A cache is best for frequently read but infrequently modified data.

- Cache servers are not suitable for storing critical data as they use volatile memory.

- Important data should be stored in persistent data stores to prevent loss in case of cache server restarts.

Set an Expiration Policy:

- Implement an expiration policy to remove expired data from the cache.

- Avoid setting expiration dates too short (to prevent frequent database reloads), and too long (to prevent stale data).

Maintain Synchronization Between Data Stores and Cache

- Inconsistencies can arise due to separate operations on data storage and cache, especially in distributed environments.

Mitigate Failures:

- Use multiple cache servers across different data centers to avoid single points of failure.

- Over-provision memory to accommodate increased usage and prevent performance issues.

Implement an Eviction Policy:

- When the cache is full, new items may cause existing ones to be removed (cache eviction).

- A popular eviction policy is Least Recently Used (LRU), but other policies like Least Frequently Used (LFU) or First In, First Out (FIFO) can be chosen based on specific use cases.

Real-World Applications of Caching

**Social Media Platforms:** Imagine scrolling through your Facebook feed. Thanks to caching, you see profile pictures, trending posts, and recently liked content instantly, even if millions of users are accessing the platform simultaneously.

Caching these frequently accessed elements on servers or your device minimizes delays and makes the experience smoother and more engaging.

**E-commerce Websites:** When browsing Amazon for a new gadget, you expect a seamless shopping experience. Caching plays a crucial role here. Product images, descriptions, and pricing information are cached, enabling the website to display search results and product pages rapidly.

This is especially crucial during peak seasons like Black Friday or Cyber Monday, where caching helps handle surges in traffic and ensures customers can complete their purchases without encountering delays.

**Content Management Systems (CMS):** Millions of websites rely on CMS platforms like WordPress. To ensure smooth performance for all these users, many CMS platforms integrate caching plugins. These plugins cache frequently accessed pages, reducing the load on the server and database.

This translates to faster page loading times, improved SEO ranking due to faster indexing by search engines, and a more responsive website overall, providing a better experience for visitors.

What is a Content Delivery Network (CDN)?

Now, think of a CDN as a global network of book delivery trucks. Instead of storing all the books in one central library, you have local branches worldwide, each with copies of the most popular books.

When readers request a book, you don’t have to ship it from the main library. Instead, you direct them to the nearest branch, where they can quickly pick up a copy. This cuts down on travel time (data transfer time) and keeps everyone happy with fast access to their favorite books.

In technical terms, a CDN is a network of servers distributed across various locations globally. Its primary purpose is to deliver web content, such as images, videos, scripts, and stylesheets to users more efficiently by reducing the physical distance between the server and the user.

How CDNs Work:

First, imagine that User A wants to see an image on a website. They click on a link provided by the CDN, like “https://mywebsite.cloudfront.net/image.jpg". This requests the image.

Then, if the image isn’t in the CDN’s storage (cache), the CDN fetches the image from the original source, like a web server or Amazon S3.

In response to that, the original source sends the image back to the CDN. It might include a Time-to-Live (TTL) header, indicating how long the image should stay cached.

Next, the the CDN stores the image and serves it to User A. It stays cached until the TTL expires.

Then let's say that user B requests the same image. At that point, the CDN checks if it’s still in the cache. If the image is still cached (TTL hasn’t expired), the CDN serves it from there (a hit). Otherwise (a miss), it fetches a fresh copy from the origin.

What to Consider When Implementing a CDN

- Cost Management: CDNs charge for data transfers. It’s wise to cache frequently accessed content, but not everything.

- Cache Expiry: Set appropriate cache expiry times. Too long, and content might be stale. Too short, and it strains origin servers.

- CDN Fallback: Plan for CDN failures. Ensure your website can switch to fetching resources directly from the origin if needed.

- Invalidating Files: You can remove files from the CDN before they expire using various methods provided by CDN vendors.

Real-World Applications of a CDN

**Video Streaming Services:** Imagine you're in Sydney, Australia, craving to watch the latest season of your favorite show on Netflix. Without a CDN, the data would have to travel all the way from a server in, say, California, leading to buffering and frustrating delays.

But thanks to CDNs, Netflix caches popular content on edge servers closer to you, in Sydney or its surrounding region. This significantly reduces the distance the data needs to travel, ensuring smooth playback and an uninterrupted viewing experience, regardless of your location.

In fact, studies show that CDNs can **reduce video startup time by up to 50%**, making a significant difference in user satisfaction.

**Gaming Content Distribution:** Gamers know the pain of waiting for massive game updates or DLC downloads. But companies like Steam and Epic Games leverage CDNs to make things faster.

These platforms cache game files, updates, and multiplayer assets on edge servers close to gaming communities. This means whether you're downloading a new game in New York or patching your favorite title in Tokyo, the data doesn't have to travel across continents.

Using CDNs can decrease download times quite a bit, leading to quicker access to the games you love and smoother multiplayer experiences with minimal lag.

**Global News Websites:** Staying informed about global events shouldn't be hindered by slow loading times. Major news organizations like BBC News and The New York Times use CDNs to ensure their breaking news updates and multimedia content reach audiences worldwide instantly.

By caching critical information like articles, videos, and images on servers across different continents, CDNs enable news websites to deliver real-time updates quickly, keeping readers informed regardless of their location.

During major events or emergencies, this can be especially crucial, as evidenced by a case study where a news organization using a CDN reported a **20% increase in website traffic without any performance issues** during a breaking news event.

Caching vs CDNs – What's the Difference?

Similarities between caching and CDNs:

Improved Performance: Both CDNs and caching aim to enhance website performance by reducing latency and speeding up content delivery.

Efficient Resource Utilization: By serving cached or replicated content, both approaches help optimize resource utilization and reduce server load.

Enhanced User Experience: Faster load times lead to a better user experience, whether achieved through CDNs or caching.

Differences between Caching and CDNs

Scope:

- CDNs: CDNs are a network of servers located in different geographic locations around the world.

- Caching: Caching is a method of storing web content on a user’s local device or server.

Implementation:

- CDNs: CDNs require a separate infrastructure and configuration.

- Caching: Caching can be implemented within a web application or server using caching rules and directives.

Geographic Coverage:

- CDNs: Designed to deliver web content to users across the world.

- Caching: Typically used to improve performance for individual users or within a local network.

Network Architecture:

- CDNs: Use a distributed network of servers to cache and deliver content.

- Caching: This can be implemented using various types of storage such as local disk, memory, or a server-side cache.

Performance Benefits:

- CDNs: Provide faster and more reliable content delivery by caching content in multiple locations.

- Caching: Improves performance by reducing the number of requests to the origin server and delivering content faster from a local cache.

Cost:

- CDNs: Can be more expensive to implement and maintain due to the need for a separate infrastructure and ongoing costs for network maintenance.

- Caching: Can be implemented using existing infrastructure and server resources, potentially reducing costs.

When to use Caching

Caching is ideal for frequently accessed content that doesn't change frequently. This includes static assets like images, CSS files, and JavaScript libraries.

It's particularly effective for websites with a substantial user base accessing similar content, such as news websites, blogs, and e-commerce platforms.

Caching can also significantly reduce server load and improve response times for users, especially in scenarios where content delivery latency is a concern.

When to use CDNs

CDNs are invaluable for delivering content to a global audience, especially when geographical distance between users and origin servers leads to latency issues.

They are well-suited for serving dynamic content, streaming media, and handling sudden spikes in traffic.

CDNs also excel in scenarios where content needs to be delivered reliably and consistently across diverse geographic regions, ensuring optimal user experience regardless of location.

Combining Caching and CDNs

In many scenarios, employing both caching and CDNs together yields optimal results, particularly for dynamic websites and applications where a mix of static and dynamic content delivery is essential. Let's consider a popular news website as an example.

Imagine a bustling news website that regularly publishes breaking news articles, accompanied by images and videos. While the core news content is dynamic and frequently updated, the images and videos associated with older articles remain relatively static and are accessed repeatedly by users.

To address this, the website can implement a combined strategy:

- Caching on the Origin Server: Frequently accessed elements like website templates, navigation menus, and static content are cached directly on the origin server. This caching reduces server load and enhances performance for initial page loads.

- CDN Caching: The website leverages a CDN to cache frequently accessed images and videos associated with news articles on edge servers located worldwide. This ensures that users, regardless of their geographic location, can swiftly access these elements with minimal latency.

There are many benefits of the combined approach, such as:

- Faster Loading Times: By serving cached content from both the origin server and CDN edge servers, users experience significantly faster loading times, leading to a more engaging browsing experience.

- Reduced Server Load: Caching alleviates pressure on the origin server, enabling it to efficiently process dynamic content updates while serving static elements from cache.

- Improved Global Reach: The CDN ensures that users worldwide can access the website and its content with minimal delays, irrespective of their proximity to the origin server.

But there are also some factors to consider:

- Cache Invalidation: Regularly updating cached content ensures users access the latest information. Most CDNs offer efficient cache invalidation mechanisms to facilitate this process.

- Cost Optimization: While combining caching and CDNs enhances performance, it's crucial to evaluate the cost-effectiveness of caching specific content. Analyzing user access patterns helps determine the optimal caching strategy.

By strategically combining caching and CDNs, you and your team can create a robust content delivery infrastructure that delivers a superior user experience worldwide.

Wrapping Up

Both CDNs and caching play crucial roles in optimizing website performance and user experience by speeding up content delivery.

While caching stores frequently accessed data locally for quick retrieval, CDNs provide a geographically distributed network of servers to deliver content efficiently to users worldwide.

Understanding their similarities in performance improvement and resource utilization, as well as their key differences in scope, implementation, and cost is crucial for choosing the right approach for your specific needs.